Last month I talked about how I started collecting data from sp_whoisactive. The long term goal was to analyze and tune those long running processes that are sometimes hard to quantify in Query Store. We had started collecting the data in a table (whoisactiveLog), but wanted to make a simple table that our Engineers could refer to and find the long running processes. We also wanted to archive off the whoisactiveLog into another table and save that for 70 days in case it was needed for further research on the tuning of processes. Each night, we have a break in our processes where we can do this maintenance so we decided we would empty the whoisactiveLog table completely.

We decided that for the Analysis table, we would only collect processes that had been running for longer than 5 minutes. How would we be able to tell that they had been running for 5 minutes or longer? We looked at the SQLText and count the number of times for that session_id and start_time that text appeared.

We started by creating the WhoIsActiveAnalysis table and the WhoIsActiveArchive Table again in my dba schema:

CREATE TABLE [dba].[whoisactiveAnalysis](

[ProcessTime] [varchar](20) NULL,

[session_id] [smallint] NOT NULL,

[login_name] [nvarchar](128) NOT NULL,

[SQLText] [nvarchar](max) NULL,

[SQLCommand] [nvarchar](max) NULL,

[program_name] [nvarchar](128) NULL,

[start_time] [datetime] NOT NULL,

[collection_time] [datetime] NOT NULL,

[EstimatedMinutes] [int] NOT NULL

) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]

CREATE TABLE [dba].[whoisactiveArchive](

[dd hh:mm:ss.mss] [varchar](20) NULL,

[percent_complete] [varchar](30) NULL,

[host_name] [nvarchar](128) NULL,

[session_id] [smallint] NOT NULL,

[login_name] [nvarchar](128) NOT NULL,

[status] [varchar](30) NOT NULL,

[sql_text] [xml] NULL,

[wait_info] [nvarchar](4000) NULL,

[blocking_session_id] [smallint] NULL,

[blocked_session_count] [varchar](30) NULL,

[reads] [varchar](30) NULL,

[physical_reads] [varchar](30) NULL,

[writes] [varchar](30) NULL,

[tempdb_allocations] [varchar](30) NULL,

[tempdb_current] [varchar](30) NULL,

[CPU] [varchar](30) NULL,

[used_memory] [varchar](30) NULL,

[open_tran_count] [varchar](30) NULL,

[sql_command] [xml] NULL,

[database_name] [nvarchar](128) NULL,

[program_name] [nvarchar](128) NULL,

[start_time] [datetime] NOT NULL,

[login_time] [datetime] NULL,

[request_id] [int] NULL,

[collection_time] [datetime] NOT NULL

) ON [PRIMARY] TEXTIMAGE_ON [PRIMARY]

Next I created my stored procedure to load the Analysis table, load the Archive table, remove anything older than 70 days from the Archive table (we don’t want to keep too much) and empty the Log table so it can start fresh in the morning.

/*Get only what has been running longer than 5 minutes

and only bring in one record for what has been running longer than 5 minutes.

There may be multiple records for the same process if there are multiple steps taking longer

than 5 minutes.*/

CREATE PROC [dba].[LoadWhoIsActiveAnalysis]

AS

INSERT INTO [dba].[whoisactiveAnalysis]

([ProcessTime]

,[session_id]

,[login_name]

,[SQLText]

,[SQLCommand]

,[program_name]

,[start_time]

,[collection_time]

,[EstimatedMinutes])

SELECT AL.[dd hh:mm:ss.mss] AS ProcessTime

,AL.[session_id]

,AL.[login_name]

,CAST(AL.[sql_text] AS nvarchar(MAX)) AS SQLText

,CAST(AL.[sql_command] AS nvarchar(MAX)) AS SQLCommand

,AL.[program_name]

,AL.[start_time]

,AL.[collection_time]

,AG.StepCount AS EstimatedMinutes

FROM [dba].[whoisactiveLog] AL

INNER JOIN (SELECT MAX([dd hh:mm:ss.mss]) AS ProcessTime

, COUNT([dd hh:mm:ss.mss]) AS StepCount

, CAST(sql_text AS nvarchar(MAX)) AS SQLText

, session_id

, start_time

FROM [dba].[whoisactiveLog]

GROUP BY CAST(sql_text AS nvarchar(MAX))

, start_time

, session_id

HAVING COUNT([dd hh:mm:ss.mss]) > 5) AG ON AL.session_id = AG.session_id AND AL.[dd hh:mm:ss.mss] = AG.ProcessTime

/*Insert everything from the Log into the Archive*/

INSERT INTO [dba].[whoisactiveArchive]

([dd hh:mm:ss.mss]

,[percent_complete]

,[host_name]

,[session_id]

,[login_name]

,[status]

,[sql_text]

,[wait_info]

,[blocking_session_id]

,[blocked_session_count]

,[reads]

,[physical_reads]

,[writes]

,[tempdb_allocations]

,[tempdb_current]

,[CPU]

,[used_memory]

,[open_tran_count]

,[sql_command]

,[database_name]

,[program_name]

,[start_time]

,[login_time]

,[request_id]

,[collection_time])

SELECT [dd hh:mm:ss.mss]

,[percent_complete]

,[host_name]

,[session_id]

,[login_name]

,[status]

,[sql_text]

,[wait_info]

,[blocking_session_id]

,[blocked_session_count]

,[reads]

,[physical_reads]

,[writes]

,[tempdb_allocations]

,[tempdb_current]

,[CPU]

,[used_memory]

,[open_tran_count]

,[sql_command]

,[database_name]

,[program_name]

,[start_time]

,[login_time]

,[request_id]

,[collection_time]

FROM [dba].[whoisactiveLog]

/*Rolling Delete to remove anything older than 70 days from the Archive*/

DELETE

FROM [dba].[whoisactiveArchive]

WHERE collection_time < GETDATE()-70

/*Turncate after the archive has been completed to prepare for tomorrow with empty table*/

TRUNCATE TABLE [dba].[whoisactiveLog]

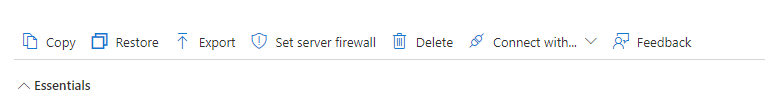

After creating this stored procedure I was ready to create a pipeline that executes once a day to do my Analysis and clean my tables. So far it has been great.

The song for this post is Follow Your Arrow by Kacey Musgraves.